Post-deployment verification

In this section, we'll see how to request metadata as part of the API response so that we can get the LLMChallenge log and costs.

Enabling return of metadata in the API response

Once the Prompt Studio project has been deployed as an API, you can pass the include_metadata parameter when you make an API call. The response then includes details on LLMChallenge, along with how much the challenge cost you.
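As a rough illustration, the call could be made from Python along the following lines. This is a minimal sketch, not the definitive client: the endpoint URL, the API key, the Bearer authorization header, the `files` upload field and the helper names are all assumptions made for the example. Only the include_metadata parameter name comes from this guide, so use the URL and credentials shown for your own API deployment.

```python
import json

# Hypothetical placeholders: replace with the endpoint URL and API key
# of your own API deployment.
API_URL = "https://example.invalid/deployment/api/my_org/my_api/"
API_KEY = "YOUR_API_KEY"


def build_form_fields(include_metadata: bool = True) -> dict:
    """Return the non-file form fields sent with the request."""
    # json.dumps turns the Python bool into the string "true" or "false".
    return {"include_metadata": json.dumps(include_metadata)}


def call_api(file_path: str) -> dict:
    """Send one document to the deployed API and return the parsed JSON.

    Assumes the third-party `requests` package is installed and that the
    deployment accepts a multipart upload under a field named "files".
    """
    import requests  # imported lazily so the helpers above work without it

    with open(file_path, "rb") as fh:
        response = requests.post(
            API_URL,
            headers={"Authorization": f"Bearer {API_KEY}"},
            data=build_form_fields(include_metadata=True),
            files={"files": fh},
            timeout=600,
        )
    response.raise_for_status()
    return response.json()
```

Calling `call_api("invoice.pdf")` with a real endpoint and key would return the response JSON, including the metadata described in the rest of this section.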

LLMChallenge metadata in the API response

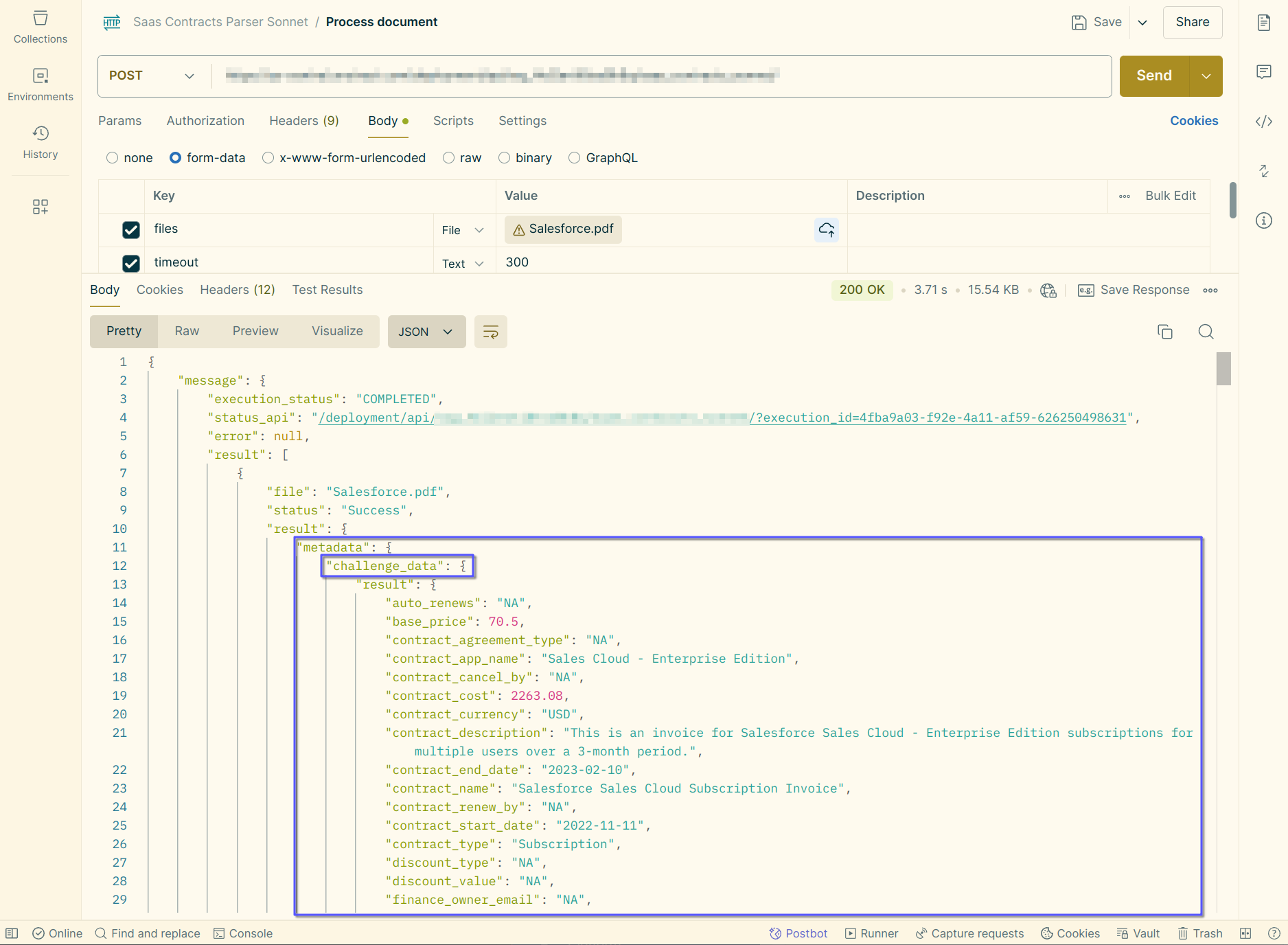

In the screenshot below, you can see that the LLMChallenge log is included in the API response metadata, under the challenge_data key of the response JSON. This log can be very useful when you need to debug LLMChallenge.
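If you want to pull the log out of the response programmatically, one option is to search the parsed JSON for the challenge_data key. The sketch below assumes nothing about where the key sits in the response, so it walks the whole structure. The `find_key` helper and the sample payload (including its field names and values) are hypothetical and only stand in for a real response.

```python
from typing import Any, Iterator


def find_key(node: Any, key: str) -> Iterator[Any]:
    """Yield every value stored under `key` anywhere in nested JSON data."""
    if isinstance(node, dict):
        for name, value in node.items():
            if name == key:
                yield value
            else:
                yield from find_key(value, key)
    elif isinstance(node, list):
        for item in node:
            yield from find_key(item, key)


# Hypothetical response shape, for illustration only.
sample_response = {
    "result": [
        {
            "file": "invoice.pdf",
            "metadata": {
                "challenge_data": {"invoice_number": "example challenge log"},
            },
        }
    ]
}

challenge_logs = list(find_key(sample_response, "challenge_data"))
for log in challenge_logs:
    print(log)
```

Searching by key rather than by a fixed path keeps the helper usable even if the metadata is nested differently in your deployment.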

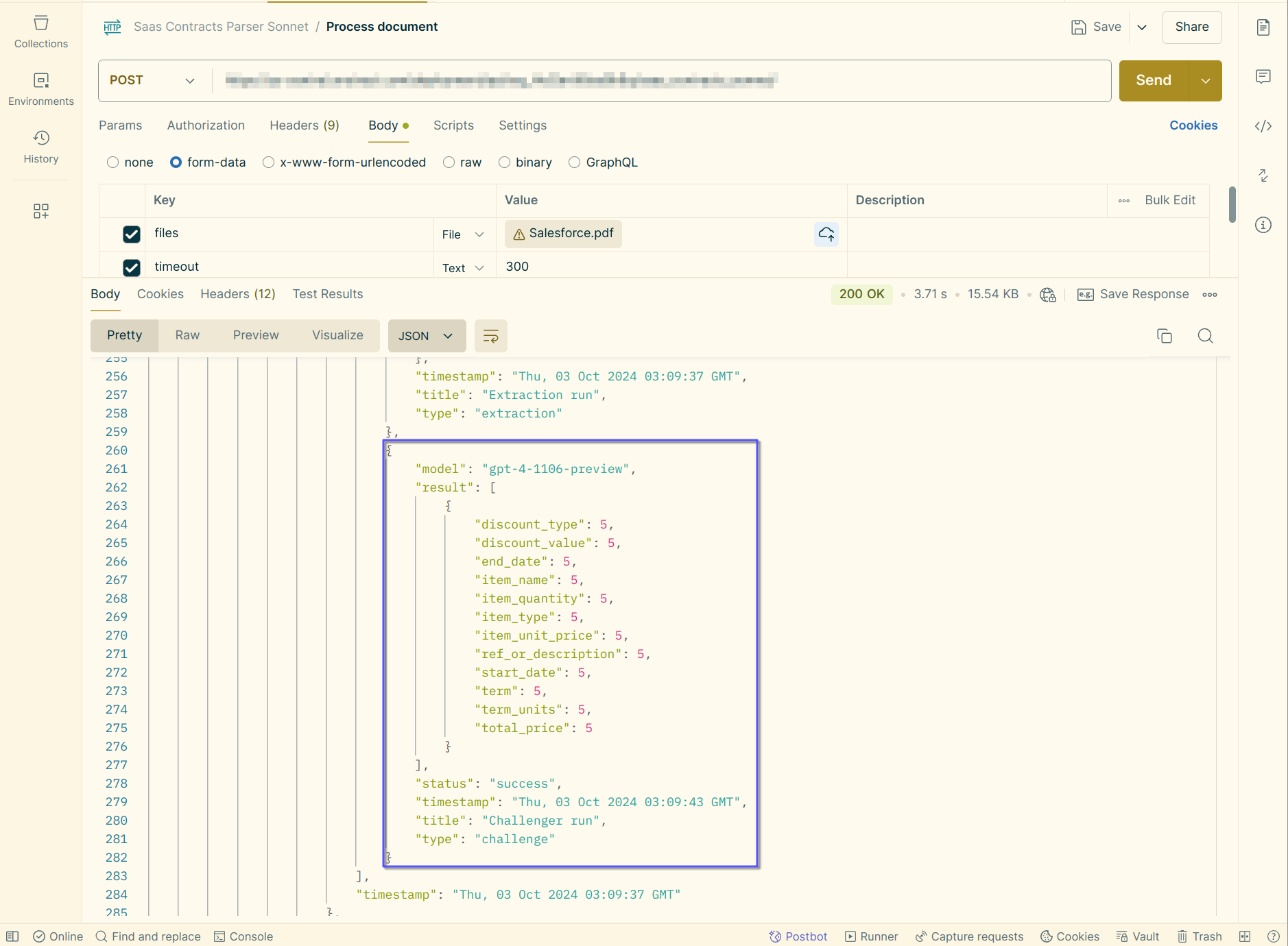

You can also see the scores that the challenger LLM is generating against the responses of the extractor LLM:

LLMChallenge cost

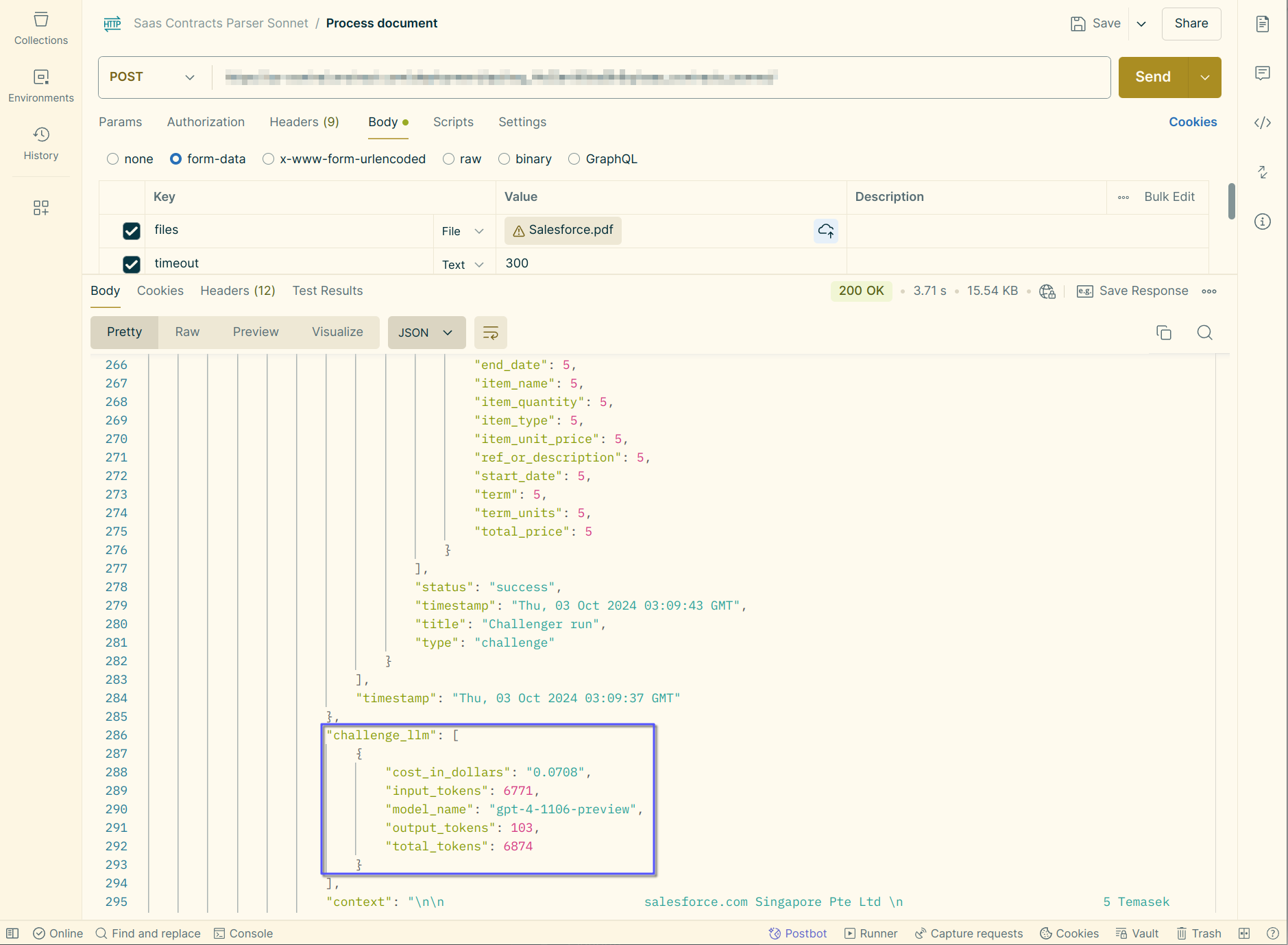

When API metadata is enabled, you'll also get the number of tokens spent on the challenger LLM along with a cost estimate, as shown in the accompanying screenshot.
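To sanity-check a reported estimate, you can redo the arithmetic from the token counts yourself. The sketch below is a generic calculation under stated assumptions: the function name is hypothetical, and the token counts and per-1,000-token prices are placeholders rather than real pricing or real output from the API.

```python
def estimate_cost(
    input_tokens: int,
    output_tokens: int,
    input_price_per_1k: float,
    output_price_per_1k: float,
) -> float:
    """Estimate the cost from token counts and per-1,000-token prices."""
    return (
        input_tokens / 1000 * input_price_per_1k
        + output_tokens / 1000 * output_price_per_1k
    )


# Placeholder numbers: 2,000 input tokens at 0.5 per 1K tokens plus
# 500 output tokens at 1.5 per 1K tokens.
challenger_cost = estimate_cost(2000, 500, 0.5, 1.5)
print(challenger_cost)
```

Substitute the token counts from your own response metadata and your LLM provider's current prices to compare against the estimate returned by the API.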

For a more detailed guide on calculating the total cost of extraction, please see the Calculating Extraction Costs user guide.