Amazon Bedrock Cross-Region Inference

📘 Introduction

Amazon Bedrock Cross-Region Inference allows you to access foundation models across different AWS regions, providing enhanced flexibility and operational benefits for your AI applications.

Why Cross-Region Inference is Required

Cross-Region Inference addresses several critical business and technical requirements:

- Model Availability – Some foundation models may not be available in all AWS regions. Cross-region access ensures you can use the specific models your application requires regardless of your primary region.

- Latency Optimization – Accessing models in regions closer to your end users reduces response times and improves UX.

- Disaster Recovery – Provides redundancy and failover options across AWS regions.

- Compliance and Data Residency – Choose regions that meet your specific regulatory or data residency needs.

- Cost Optimization – Choose the most cost-effective region based on pricing or capacity.

- Load Distribution – Distribute workloads to avoid service limits and ensure performance.

🛠️ Step 1: Accessing Cross-Region Inference in Bedrock Console

✅ Prerequisites

- AWS account with necessary permissions

- Knowledge of model regions (e.g., Claude 3 in us-east-1, Llama 3 in us-west-2)

- IAM user/role with Bedrock permissions
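One way to confirm which regions offer a given model is to query the Bedrock control-plane API in each candidate region. The following is a minimal sketch, assuming boto3 is installed and credentials are configured; the helper names `regions_offering_model` and `make_clients` and the region list in the usage comment are illustrative and not part of this guide.

```python
def regions_offering_model(model_id_prefix, clients_by_region):
    """Return the regions whose ListFoundationModels output contains the model.

    clients_by_region maps a region name to a boto3 'bedrock' client
    (or any object exposing list_foundation_models()).
    """
    matches = []
    for region, client in clients_by_region.items():
        summaries = client.list_foundation_models().get("modelSummaries", [])
        if any(s["modelId"].startswith(model_id_prefix) for s in summaries):
            matches.append(region)
    return sorted(matches)


def make_clients(regions):
    """Build one control-plane client per region (boto3 is imported lazily)."""
    import boto3  # assumed installed; AWS credentials must be configured
    return {r: boto3.client("bedrock", region_name=r) for r in regions}


# Example usage (requires AWS credentials):
# clients = make_clients(["us-east-1", "us-west-2"])
# print(regions_offering_model("anthropic.claude-3-sonnet", clients))
```

Passing the clients in as a parameter keeps the lookup logic separate from credential handling, so it can be exercised with stub clients.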

🚀 Access Bedrock Console

- Sign in to AWS Console

- Search for Amazon Bedrock and open it

- Switch to the region where your model resides (e.g., us-east-1)

🌐 Navigate to Cross-Region Inference

- In the left menu, click Model access or Cross-region inference (if visible)

- View models and regions in the access matrix

- Enable cross-region access via toggle/button if available

🔓 Step 2: Request Access to Models

✅ Enable Cross-Region Inference

- Enable the cross-region inference option in the Bedrock console

- Accept the terms and conditions

📍 Select Regions and Models

- Pick primary and secondary regions

- Select required foundation models (e.g., Claude 3, Titan)

- Click Request Access for each model if not already enabled
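To see which regions a cross-region inference profile can route to, the profile list can also be read programmatically. This is a sketch under the assumption that your boto3 version exposes `list_inference_profiles` on the `bedrock` client; the helper name `profile_regions` is hypothetical.

```python
def profile_regions(client, type_equals="SYSTEM_DEFINED"):
    """Map each inference profile ID to the sorted regions it can route to.

    client is a boto3 'bedrock' client (or any object exposing
    list_inference_profiles()). Results are paginated via nextToken.
    """
    result = {}
    kwargs = {"typeEquals": type_equals}
    while True:
        page = client.list_inference_profiles(**kwargs)
        for profile in page.get("inferenceProfileSummaries", []):
            # Model ARNs look like arn:aws:bedrock:<region>::foundation-model/<id>,
            # so the region is the fourth colon-separated field.
            regions = sorted(
                {m["modelArn"].split(":")[3] for m in profile.get("models", [])}
            )
            result[profile["inferenceProfileId"]] = regions
        token = page.get("nextToken")
        if not token:
            return result
        kwargs["nextToken"] = token


# Example usage (requires AWS credentials):
# import boto3
# print(profile_regions(boto3.client("bedrock", region_name="us-east-1")))
```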

📈 Monitor Access Status

Use the status matrix to check:

- ✅ Access Granted

- ⏳ Pending Approval

- ❌ Denied

🔐 Step 3: IAM Setup for Cross-Region Inference

✅ Create IAM User or Role

- Go to IAM Console

- Create a user with programmatic access, or a role if the caller is a service such as Lambda or SageMaker

📜 Attach Permissions

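Use the following policy. Foundation model ARNs have an empty account ID field, and calling a model through a cross-region inference profile requires access to both the profile and the underlying model in every region the profile can route to:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "bedrock:InvokeModel",
            "bedrock:InvokeModelWithResponseStream"
          ],
          "Resource": [
            "arn:aws:bedrock:us-east-1::foundation-model/*",
            "arn:aws:bedrock:us-west-2::foundation-model/*",
            "arn:aws:bedrock:eu-central-1::foundation-model/*",
            "arn:aws:bedrock:ap-northeast-1::foundation-model/*",
            "arn:aws:bedrock:us-east-1:*:inference-profile/*",
            "arn:aws:bedrock:us-west-2:*:inference-profile/*",
            "arn:aws:bedrock:eu-central-1:*:inference-profile/*",
            "arn:aws:bedrock:ap-northeast-1:*:inference-profile/*"
          ]
        },
        {
          "Effect": "Allow",
          "Action": [
            "bedrock:ListFoundationModels",
            "bedrock:GetFoundationModel"
          ],
          "Resource": "*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "bedrock:ListInferenceProfiles",
            "bedrock:GetInferenceProfile"
          ],
          "Resource": "*"
        }
      ]
    }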
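If the region list changes often, the invoke statement can be generated rather than edited by hand. The sketch below assumes the foundation-model and inference-profile ARN formats documented by AWS (foundation models carry no account ID; inference profiles are account-scoped); the function name `build_invoke_policy` is illustrative.

```python
import json


def build_invoke_policy(regions):
    """Return an IAM policy dict that allows model invocation in the given regions."""
    resources = []
    for region in regions:
        # Foundation-model ARNs have an empty account field.
        resources.append(f"arn:aws:bedrock:{region}::foundation-model/*")
        # Inference profiles are account-scoped; "*" matches any account.
        resources.append(f"arn:aws:bedrock:{region}:*:inference-profile/*")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                "Resource": resources,
            }
        ],
    }


policy = build_invoke_policy(["us-east-1", "us-west-2"])
print(json.dumps(policy, indent=2))
```

In production you would likely replace the `*` wildcards with specific model and profile IDs to follow least privilege.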

🧪 Step 4: Test Cross-Region Inference

✅ Python SDK Example

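    import boto3
    import json

    # Runtime client in the source region (the region your application calls).
    bedrock = boto3.client(
        service_name='bedrock-runtime',
        region_name='us-east-1'
    )

    response = bedrock.invoke_model(
        # The "us." prefix selects the US cross-region inference profile;
        # use the bare model ID to keep the request in a single region.
        modelId='us.anthropic.claude-3-sonnet-20240229-v1:0',
        # Claude 3 models require the Messages API request format.
        body=json.dumps({
            "anthropic_version": "bedrock-2023-05-31",
            "max_tokens": 300,
            "messages": [
                {"role": "user", "content": "Explain AI governance."}
            ]
        }),
        contentType='application/json',
        accept='application/json'
    )

    # The body is a streaming object; read and decode it to get the JSON text.
    print(response['body'].read().decode())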
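Claude 3 models on Bedrock expect the Anthropic Messages request format and return a list of content blocks rather than a single string. The helpers below are a small sketch of building that request body and pulling the text out of the response; the function names `build_messages_body` and `extract_text` are hypothetical.

```python
import json


def build_messages_body(prompt, max_tokens=300):
    """Serialize a single-turn Messages API request for Claude on Bedrock."""
    return json.dumps({
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": [
            {"role": "user", "content": [{"type": "text", "text": prompt}]}
        ],
    })


def extract_text(raw_body):
    """Concatenate the text blocks from a Messages API response body."""
    payload = json.loads(raw_body)
    return "".join(
        block["text"]
        for block in payload.get("content", [])
        if block.get("type") == "text"
    )


# Example usage (requires AWS credentials and model access):
# response = bedrock.invoke_model(modelId=model_id, body=build_messages_body("Hi"))
# print(extract_text(response["body"].read()))
```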

✅ AWS CLI Example

    aws bedrock-runtime invoke-model \
      --region us-east-1 \
      --model-id us.anthropic.claude-3-sonnet-20240229-v1:0 \
      --content-type application/json \
      --accept application/json \
      --cli-binary-format raw-in-base64-out \
      --body '{"anthropic_version":"bedrock-2023-05-31","max_tokens":300,"messages":[{"role":"user","content":"Hello Claude"}]}' \
      output.json
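The IAM policy in Step 3 also grants `bedrock:InvokeModelWithResponseStream`. As a sketch of how a streamed Claude response can be consumed, the helper below walks the event stream and yields text fragments; it assumes the Messages API streaming event shapes (`content_block_delta` events carrying `text_delta` payloads), and the name `iter_stream_text` is illustrative.

```python
import json


def iter_stream_text(events):
    """Yield text fragments from an InvokeModelWithResponseStream event stream."""
    for event in events:
        chunk = event.get("chunk")
        if not chunk:
            continue  # skip non-chunk events such as error or metadata entries
        data = json.loads(chunk["bytes"])
        if data.get("type") == "content_block_delta":
            delta = data.get("delta", {})
            if delta.get("type") == "text_delta":
                yield delta["text"]


# Example usage (requires AWS credentials and model access):
# response = bedrock.invoke_model_with_response_stream(modelId=model_id, body=body)
# for piece in iter_stream_text(response["body"]):
#     print(piece, end="", flush=True)
```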

💸 Step 5: Pricing & Considerations

- Bedrock pricing is per 1,000 tokens for input/output

- Cross-region inference itself carries no extra charge; requests are billed at the rate of the source region (the region you call)

- Data transfer between regions may incur standard AWS fees
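Because pricing is quoted per 1,000 tokens, a rough per-request estimate is simple arithmetic. The sketch below shows the calculation; the function name `estimate_cost` is illustrative, and the prices in the usage comment are placeholders rather than actual Bedrock rates, so check the current pricing page for your model and region.

```python
def estimate_cost(input_tokens, output_tokens,
                  input_price_per_1k, output_price_per_1k):
    """Estimate request cost from token counts and per-1,000-token prices."""
    input_cost = (input_tokens / 1000) * input_price_per_1k
    output_cost = (output_tokens / 1000) * output_price_per_1k
    return input_cost + output_cost


# Example usage with placeholder prices (not real Bedrock rates):
# estimate_cost(input_tokens=2000, output_tokens=1000,
#               input_price_per_1k=0.5, output_price_per_1k=1.5)
```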

🧰 Step 6: Integration with Unstract

To use this model in Unstract:

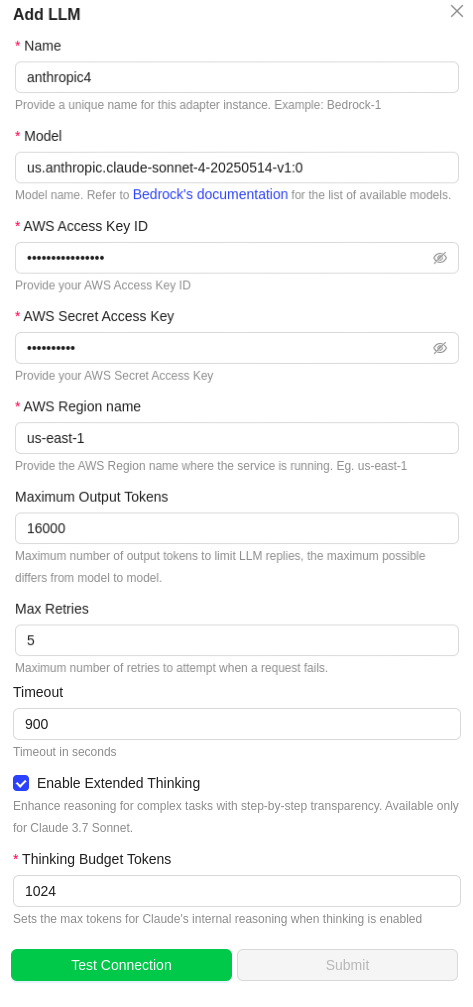

Extended Thinking Configuration

If you're using Anthropic Claude 3.7 Sonnet through Bedrock, you'll have additional options:

Enable Extended Thinking

- ☑️ Check this option to activate enhanced reasoning with step-by-step transparency

- Available for Claude 3.7 Sonnet and newer versions

Thinking Budget Tokens

- 🎯 Token Budget: Set the maximum tokens for Claude's internal reasoning

- 💡 Recommendation: Start with 5000 tokens for most use cases

- 📈 Higher Budget: More tokens = more detailed reasoning but increased costs

⚠️ Important: Extended thinking consumes additional tokens, which will increase your AWS usage costs
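For reference, this is roughly what the two settings correspond to at the API level. In the Anthropic Messages API, extended thinking is enabled with a `thinking` object whose `budget_tokens` must be at least 1,024 and smaller than `max_tokens`. The sketch below builds such a request body; how Unstract constructs its requests internally is an assumption here, and the function name `build_thinking_body` and the default `max_tokens` value are illustrative.

```python
import json

MIN_THINKING_BUDGET = 1024  # minimum thinking budget accepted by the Messages API


def build_thinking_body(prompt, budget_tokens=5000, max_tokens=8000):
    """Serialize a Messages API request with extended thinking enabled."""
    if budget_tokens < MIN_THINKING_BUDGET:
        raise ValueError(f"budget_tokens must be at least {MIN_THINKING_BUDGET}")
    if budget_tokens >= max_tokens:
        # The thinking budget counts toward max_tokens, so it must be smaller.
        raise ValueError("max_tokens must be greater than budget_tokens")
    return json.dumps({
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "thinking": {"type": "enabled", "budget_tokens": budget_tokens},
        "messages": [{"role": "user", "content": prompt}],
    })


# Example usage: 5000 is the starting budget recommended above.
# body = build_thinking_body("Summarize this contract.", budget_tokens=5000)
```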

- Go to Settings > LLMs

- Select New LLM Profile

- Select Amazon Bedrock

- Configure:

  - LLM Name: Bedrock

  - Region: The region in which the model is enabled

  - Model Name & Access Keys

  - Optional (Claude 3.7 Sonnet and newer): Enable extended thinking and set your preferred thinking budget tokens

- Test and Save