Deploying LLMChallenge

We discussed earlier in Developing and verifying LLMChallenge that, in Prompt Studio, you are only verifying whether LLMChallenge does a good job of the required structured extraction. For LLMChallenge to take effect, it needs to be deployed as part of an API Deployment, ETL Pipeline, Human Quality Review or Task Pipeline workflow. In this section, let's see how to enable LLMChallenge when deploying any of these workflow types.

To follow this section fully, you should already be familiar with how Workflows work in Unstract.

Enabling LLMChallenge

It's pretty simple, really:

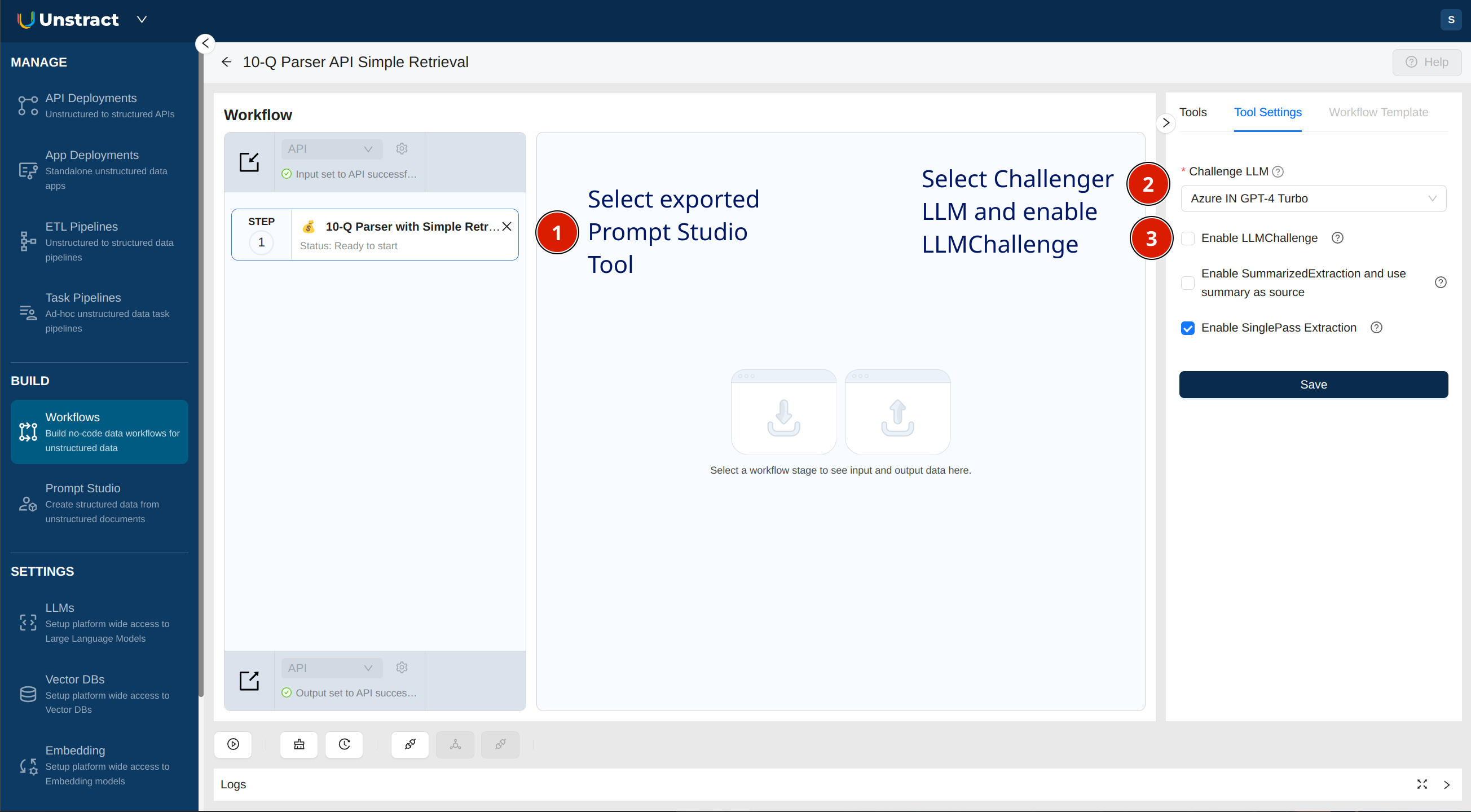

- First, open any Workflow project in which you want to enable LLMChallenge.

- In the Workflow steps, select the Tool exported from your Prompt Studio project.

- Choose a challenger LLM from the list of available LLMs.

- Enable the LLMChallenge checkbox from the Tool Settings panel on the right.
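The steps above come down to two settings on the exported Tool: which LLM acts as the challenger, and whether the LLMChallenge checkbox is ticked. The Python sketch below models them as a small data class. The field names `challenge_llm` and `enable_challenge`, the `llm_challenge_active` helper, and the rule that the checkbox requires a challenger LLM are illustrative assumptions, not Unstract's actual schema or behavior.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical model of the Tool Settings panel; field names are
# illustrative assumptions, not Unstract's real configuration schema.
@dataclass
class ToolSettings:
    challenge_llm: Optional[str] = None  # challenger LLM picked from the list
    enable_challenge: bool = False       # the LLMChallenge checkbox

    def validate(self) -> None:
        # Assumption: enabling LLMChallenge without a challenger LLM
        # is treated as a configuration error.
        if self.enable_challenge and not self.challenge_llm:
            raise ValueError("Select a challenger LLM before enabling LLMChallenge")


def llm_challenge_active(settings: ToolSettings) -> bool:
    """Return True if this (hypothetical) Tool configuration runs LLMChallenge."""
    settings.validate()
    return settings.enable_challenge


settings = ToolSettings(challenge_llm="my-challenger-llm", enable_challenge=True)
print(llm_challenge_active(settings))  # True
```

Keeping the check in one `validate` method means a misconfigured Tool fails early, before any documents are processed.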

Once this is done, LLMChallenge is enabled for this workflow. Whether the workflow is deployed as an API, ETL Pipeline, Human Quality Review or Task Pipeline, the LLMChallenge process kicks in whenever an extraction runs and the output from the extraction LLM is available, improving the accuracy of, and trust in, the extracted data.
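Conceptually, LLMChallenge is an extra step between the extraction LLM's output and the final result. The sketch below is a hypothetical, simplified model of that flow, not Unstract's implementation: `extract_with_challenge` and the stub `extract` and `challenge` callables are made up for illustration, and discarding (setting to `None`) any value the challenger rejects is an assumption.

```python
from typing import Callable, Optional

# Hypothetical signatures: the extractor returns a value for a field,
# and the challenger returns True if it agrees with that value.
Extractor = Callable[[str], Optional[str]]
Challenger = Callable[[str, Optional[str]], bool]


def extract_with_challenge(
    fields: list[str],
    extract: Extractor,
    challenge: Challenger,
    enable_challenge: bool,
) -> dict[str, Optional[str]]:
    results: dict[str, Optional[str]] = {}
    for field in fields:
        value = extract(field)  # output from the extraction LLM
        # Only when LLMChallenge is enabled does the challenger get a say.
        if enable_challenge and not challenge(field, value):
            value = None  # assumption: rejected values are discarded
        results[field] = value
    return results


# Usage with stubbed LLMs: the "challenger" only confirms invoice_number.
extracted = {"invoice_number": "INV-001", "total": "99.50"}
verified = {"invoice_number"}
out = extract_with_challenge(
    ["invoice_number", "total"],
    extract=lambda f: extracted.get(f),
    challenge=lambda f, v: f in verified,
    enable_challenge=True,
)
print(out)  # {'invoice_number': 'INV-001', 'total': None}
```

With `enable_challenge=False`, the same call returns the extractor's values unchanged, mirroring a workflow where the checkbox is left unticked.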