Developing and verifying LLMChallenge

When developing your prompts in Prompt Studio, you can enable LLMChallenge and see how it affects your extractions. Of course, in Prompt Studio you are only developing prompts and defining the extraction schema. In production, LLMChallenge runs as part of a deployed Prompt Studio project, through one of its integration types.

However, as you engineer your prompts in Prompt Studio, it's important to verify that LLMChallenge is working as expected. For this reason, you can turn it on and inspect the LLMChallenge log in the Prompt Studio UI.

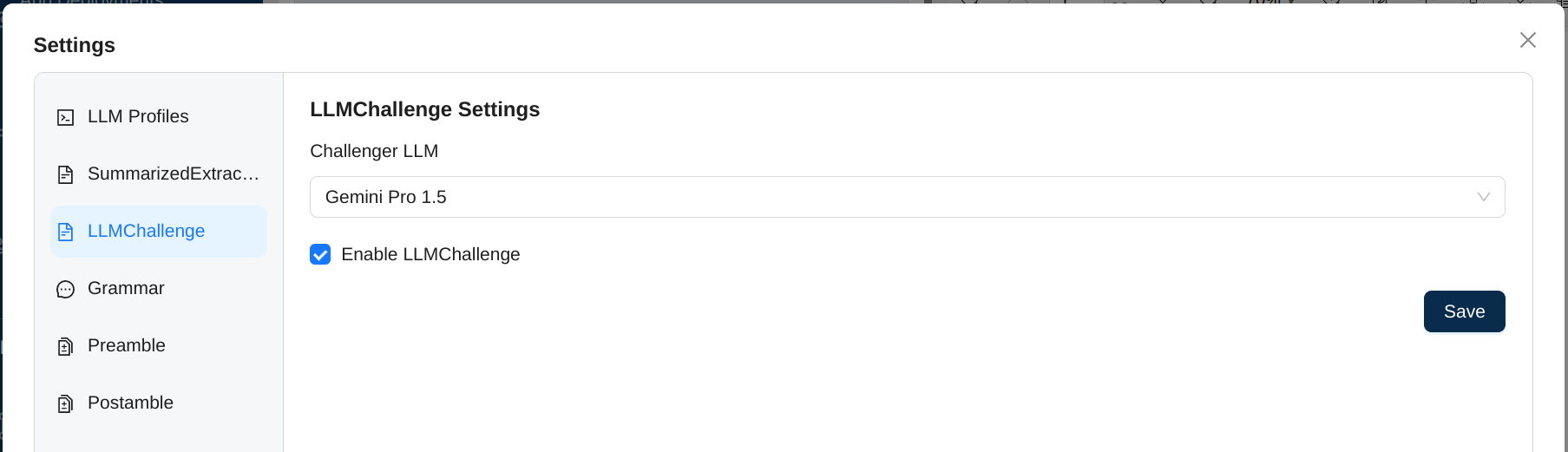

Turning on LLMChallenge

To turn on LLMChallenge in any Prompt Studio project, go to Settings🞂LLMChallenge and select an LLM from the Challenger LLM list. Then select the Enable LLMChallenge checkbox.

Choosing a challenger LLM

Ideally, the large language model chosen as the challenger should have the following two characteristics. If it lacks either one, LLMChallenge is unlikely to give you the results you expect:

- The challenger model should be a large, powerful model that is considered a flagship model. At the time of writing, models like GPT-4o, Anthropic Claude Sonnet 3.5, Gemini Pro 1.5 and Meta Llama 3.2 can be considered such models.

- The extractor LLM and the challenger LLM should come from different vendors. For instance, using Anthropic Claude Sonnet 3.5 for extraction and Meta Llama 3.2 to challenge it is a good setup. Both of these models are available as part of AWS Bedrock and can be easily added to Unstract.

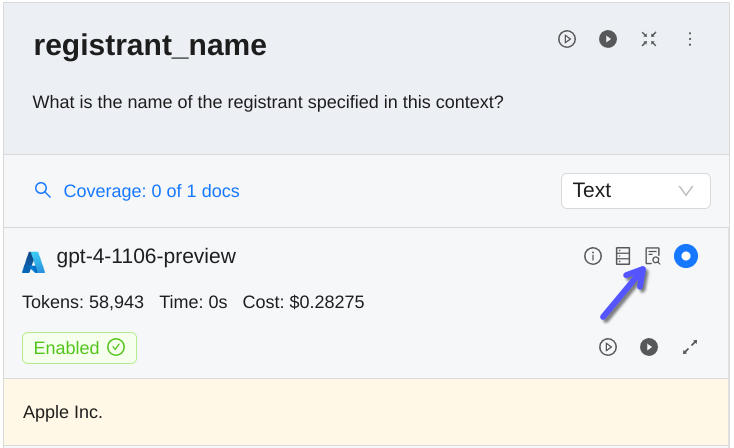
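
The two guidelines above can be expressed as a small pre-flight check. This is only an illustrative sketch, not part of Unstract: the `LLMChoice` class, the `check_challenger` function, the `flagship` flag and the vendor labels are all hypothetical names introduced here, and you would fill them in by hand for the models you have configured.

```python
from __future__ import annotations

from dataclasses import dataclass


# Hypothetical description of a configured model. None of these fields
# are read from Unstract; they are supplied manually for this sketch.
@dataclass(frozen=True)
class LLMChoice:
    name: str
    vendor: str
    flagship: bool  # your own judgment of whether this is a flagship model


def check_challenger(extractor: LLMChoice, challenger: LLMChoice) -> list[str]:
    """Return warnings for a pairing; an empty list means both guidelines hold."""
    warnings = []
    # Guideline 1: the challenger should be a flagship-class model.
    if not challenger.flagship:
        warnings.append(f"{challenger.name} is not marked as a flagship model")
    # Guideline 2: extractor and challenger should come from different vendors.
    if extractor.vendor.lower() == challenger.vendor.lower():
        warnings.append(
            f"extractor and challenger are both from {challenger.vendor}"
        )
    return warnings


# The pairing used as an example in the text above.
extractor = LLMChoice("Claude Sonnet 3.5", "Anthropic", flagship=True)
challenger = LLMChoice("Llama 3.2", "Meta", flagship=True)

# A made-up pairing that violates both guidelines.
weak_same_vendor = LLMChoice("example-small-model", "Anthropic", flagship=False)

good_pairing_warnings = check_challenger(extractor, challenger)
bad_pairing_warnings = check_challenger(extractor, weak_same_vendor)
```

Running the check before enabling LLMChallenge makes the vendor-diversity rule explicit: the second pairing produces two warnings, while the first produces none.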

Inspecting the LLMChallenge log

To open the LLMChallenge log, click its designated button, shown in the screenshot below.

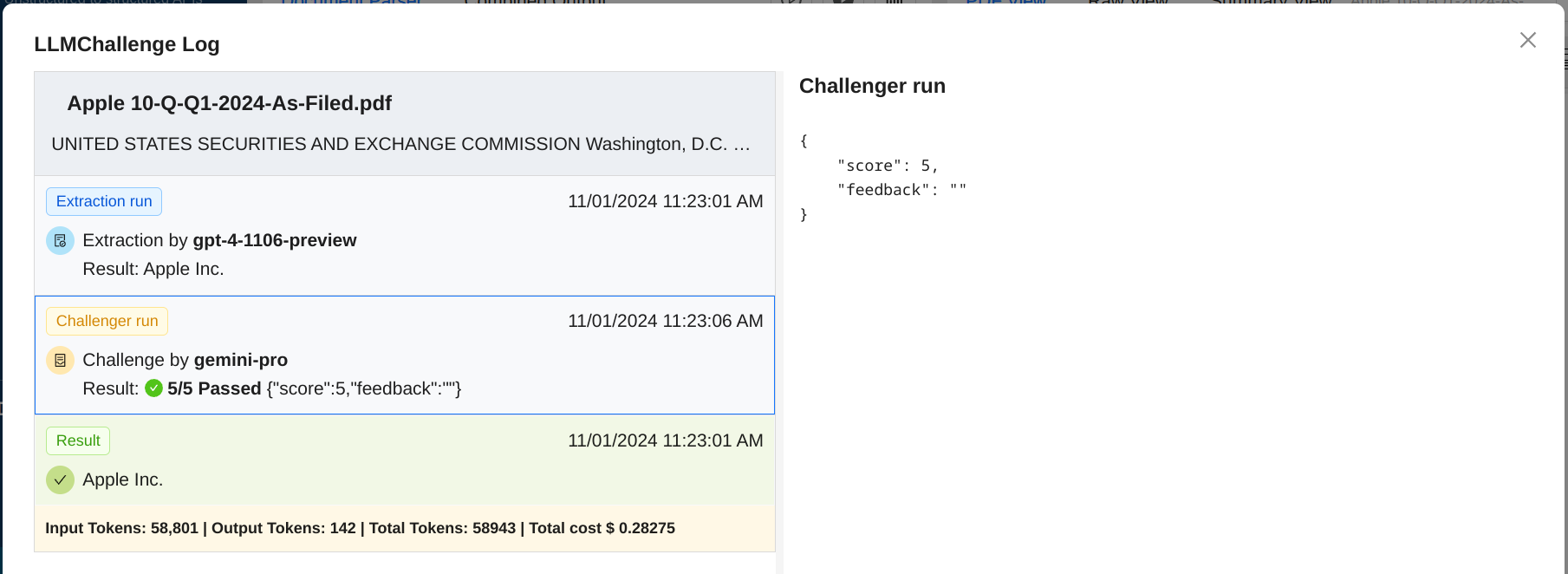

Clicking on this button brings up the LLMChallenge log dialog. You can inspect the consensus conversation between the extractor LLM and the challenger LLM in this dialog.

In the screenshot above, we can see the score that the challenger LLM (in this case, Gemini Pro 1.5) gave the extractor LLM (GPT-4-Turbo). By clicking on a stage of the LLMChallenge conversation on the left, you can inspect the result of that stage, which is shown in the right pane.